Data maturity assessment tool guideline

Introduction

The Data Strategy team of the Queensland Government Department of Customer Services, Open Data and Small and Family Business (CDSB) aims to establish a robust Data Capability Framework for the Queensland Government, enabling the delivery of streamlined, secure, and integrated services through the effective use of advanced data and digital capabilities. The Data maturity assessment tool (DMAT) helps agencies regularly evaluate their data capability and maturity and monitor their progress over time. The Queensland Data strategy (link not yet available) sets the foundation for a dynamic data ecosystem to support world-class services. It focuses on empowering agencies to maximise data use, streamline governance, engage the public, build an inclusive government-wide data ecosystem, and respectfully support Aboriginal and Torres Strait Islander communities.

The DMAT directly supports the Data strategy by providing agencies with a structured approach to assessing and enhancing their data capabilities. It is designed to benefit all agencies and communities, including Aboriginal and Torres Strait Islander communities, by ensuring inclusive and equitable data practices. This strategic vision guides the DMAT in helping agencies strengthen their data capabilities and implement the Data strategy, preparing them to meet their data-related objectives.

Purpose

Organisations that appropriately leverage data will be better placed to achieve strategic, operational, and tactical objectives. It is crucial that an agency has access to fit-for-purpose data and can use it proficiently and effectively.

To support agencies in achieving this objective, CDSB has introduced the DMAT for the Queensland Government, designed specifically to assess the readiness of an agency’s capability and capacity to use its data effectively. This method of assessing data maturity allows for a comprehensive evaluation of an agency’s data landscape, providing valuable insights into its strengths and limitations.

The primary objective of the DMAT is to evaluate current data management practices, identify areas for improvement, and ensure alignment with Queensland’s governance obligations and policies. It provides agencies with a standardised approach to assess and understand their level of data maturity and capability across the entire data life cycle. Furthermore, it provides a sustainable and consistent method for evaluating the data maturity and capabilities of the Queensland Public Sector (QPS), thereby strengthening the sector's ability to meet the Government's data objectives and goals. Agencies will be able to monitor their progress and benchmark their performance against aggregated insights or sector-wide trends derived from QPS agency data, industry norms, and global standards.

The tool is designed to support agencies in advancing the government's data-driven objectives as detailed in the Queensland Data Strategy. It incorporates aspects of the strategy's goals, and its annual findings will provide insight to guide the advancement of other QPS reform initiatives, which prioritise collaboration, a citizen-focused mindset, and organisational integrity. The tool assists the government in identifying specific areas that require improvement by objectively assessing and consistently monitoring the overall data maturity across the QPS. This information will support accountability, transparency, and cross-agency collaboration. The assessment supports informed decision-making and preparation for future data needs, optimising data management and governance across a linked QPS environment focused on improving data operations, procedures, and infrastructure.

Your agency in action

The purpose of this assessment is to provide a consistent approach for agencies across the Queensland public sector to continually assess and review their capabilities in controlling data risks, integrating systems, and organising, governing, structuring, and practically applying their data. The goal is to guide informed decisions about resources and data management. Because this tool provides a uniform way to evaluate the maturity of data practices throughout the QPS, agencies have less need to design or acquire their own evaluation tools.

An agency is not required to aim for the highest degree of maturity across all its core areas, as each agency is at a different point on its path towards data maturity. Agencies should use the assessment to establish a baseline and set achievable goals aligned with their individual vision, strategic goals, organisational structure, available resources, and existing maturity levels.

The content and maturity indicators provided by the DMAT may benefit a wide variety of agencies, from the smallest to the largest, across varying frameworks and degrees of complexity. The Senior Data Manager, or an equivalent role responsible for data management within your agency, should lead the assessment, drawing on insights and support from other departments as appropriate. Each agency is also responsible for choosing the most effective method for carrying out the evaluation within its own organisation.

Agencies should submit their data maturity evaluations through their Chief Data Officer (CDO). If a CDO is not in place, the submission should be made through a senior executive responsible for data governance, digital transformation, or corporate services. This ensures appropriate oversight and alignment with the agency’s data management strategy, and contributes to the broader development of data systems within the agency. Agencies can also nominate the most appropriate individual within the agency to complete relevant dimensions as part of the cross-functional input.

Assessment method

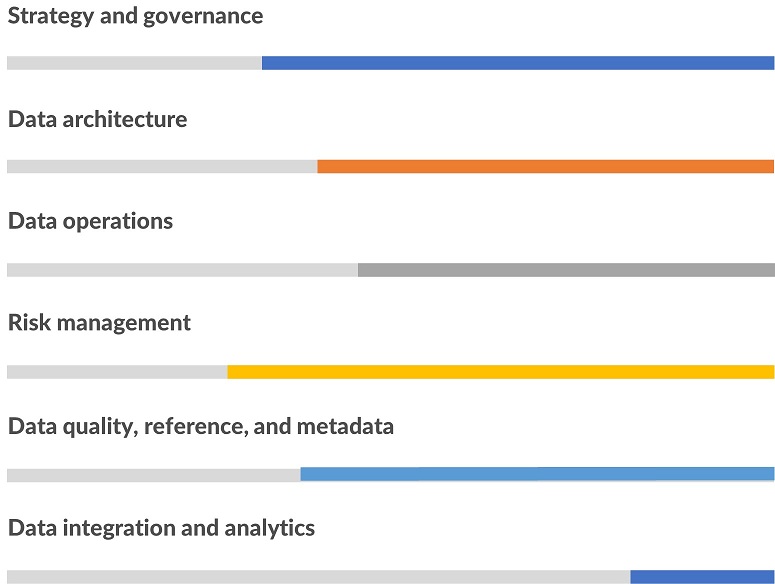

The assessment tool covers topics across seven themes of data management and the architecture supporting its activities, including but not limited to:

- Strategy and governance: Focus on evaluating processes and policies related to data usage, consistency, and appropriateness.

- Data operations: Focus on examining the day-to-day data management practices and processes.

- Data quality, reference and metadata: Focus on measuring the accuracy, completeness, and timeliness of data along with how it is described, documented, and referenced.

- Data architecture: Focus on evaluating the organisation’s data storage, management, and organisational practices.

- Risk management: Focus on evaluating data risk management strategies and practices.

- Data integrations and analytics: Focus on assessing data integration capabilities and analytics practices.

Each assessment row describes the elements or practices associated with advancing from a lower to a higher level of maturity within a particular subject and theme. Agencies are encouraged to take this comprehensive view of data maturity across all subject areas, as it supports balanced growth and a complete assessment.

Answering the questions effectively requires collaboration with subject matter experts and business areas within the agency that specialise in data and records management. Responses must be evidence-based, reflecting current practices, policies, or artefacts wherever possible. Draw on information asset registers and internal governance bodies, such as the Executive Board or Data Governance Committee, to support well-founded answers.

Reviewing assessment results against the agency’s goals and responsibilities makes it practical to prioritise resources and develop evidence-based solutions. This helps ensure that critical high-maturity components required for effective delivery are maintained, while areas of low data maturity that pose risks to the agency’s goals and objectives are identified.

When providing assessment responses, use the end of the most recent financial year as the point of reference. Agencies are encouraged to provide honest and accurate responses that uncover actual gaps, giving us the opportunity to offer focused support and resources that effectively address them.

The maturity assessment offers a matrix-based overview across diverse components and themes, revealing varying maturity levels across domains that may highlight specific patterns. Agencies should conduct this evaluation accurately, drawing on reliable sources and demonstrating a thorough understanding of their internal information and data management.

For each question, select one of six maturity levels, each with a corresponding score ranging from Unmanaged (0) to Optimised (5). Choose the response that most accurately represents your agency’s current attributes or activities, where higher levels reflect greater maturity. Appendix A provides a general summary of what the ratings indicate. The meaning of each level will vary depending on the specific question or area of focus.
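To make the six-point scale concrete, the sketch below records each response as one of the six levels summarised in Appendix A. It is illustrative only and is not part of the DMAT itself; the class name `MaturityLevel`, the helper `record_response`, and the question identifiers are hypothetical.

```python
from enum import IntEnum


class MaturityLevel(IntEnum):
    """The six DMAT maturity levels, scored 0 (Unmanaged) to 5 (Optimised)."""
    UNMANAGED = 0
    INITIAL = 1
    DEVELOPING = 2
    DEFINED = 3
    MANAGED = 4
    OPTIMISED = 5


def record_response(question_id: str, score: int) -> tuple[str, MaturityLevel]:
    """Validate one response (hypothetical helper) and return it as a level.

    MaturityLevel(score) raises ValueError for any value outside 0-5,
    so out-of-range answers are rejected rather than silently stored.
    """
    return question_id, MaturityLevel(score)


# Placeholder question identifiers, not real DMAT question numbers.
responses = [record_response("Q1", 3), record_response("Q2", 1)]
for question_id, level in responses:
    print(question_id, level.name.title(), int(level))
# Q1 Defined 3
# Q2 Initial 1
```

Using an enumeration rather than bare integers keeps the label and score together, so a report can show "Defined (3)" without a separate lookup table.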

Note: Only subject matter experts (SMEs) relevant to each focus area should respond to the corresponding questions. A list of recommended SMEs for each focus area is provided in Appendix D.

Stages of a data maturity assessment

The four stages of implementing a DMAT are outlined below.

Stage 1

Responsibility:

- Primarily agency-led.

Reasoning:

- Agencies have the best understanding of their own data landscape.

- Agencies possess institutional knowledge of critical assets and operational priorities.

CDSB’s role:

- Offer guidance and support.

- Ensure agencies accurately define and prioritise their data assets.

Stage 2

Responsibility:

- Primarily agency-led.

Reasoning:

- Agencies know their internal teams, workflows, and accountability mechanisms.

- Agencies can identify and document roles based on their organisational structures and governance frameworks.

CDSB’s role:

- Provide a framework or checklist to help agencies map roles effectively.

- Ensure alignment with best practices in data governance.

Stage 3

Responsibility:

- Collaborative effort (agency collaboration and CDSB-led).

Reasoning:

- Agencies engage internal stakeholders to gather insights on staff perceptions, pain points, and cultural challenges.

- Agencies provide access to staff and processes, ensuring a thorough evaluation of organisational context.

- Agencies identify key stakeholders, resources, and processes for comprehensive reflection.

CDSB’s role:

- Leverage expertise, tools, and frameworks for an objective assessment.

- Ensure unbiased, standardised evaluations and comparisons with benchmarks or target states.

Stage 4

Note: Stage 4 falls outside the standard engagement and is the agency's responsibility unless additional support is formally agreed.

Responsibility:

- Agency-led initiative involving independent implementation based on the outcomes of the DMAT report.

Reasoning:

- Agencies are responsible for implementation, which includes cultural shifts, resource allocation, and process changes.

- Ownership of this step ensures alignment with the agency's specific needs and environment.

CDSB’s role:

- CDSB can provide assistance only at the request of the agency and based on further discussions regarding engagement terms, capacity, and funding.

If engaged, CDSB may:

- Facilitate the creation of actionable roadmaps based on DMAT findings.

- Provide tailored recommendations on training and resource requirements.

- Offer ongoing support during the implementation phase.

Completing the assessment

Entities are encouraged to complete the DMAT annually if they are:

- a Queensland state government agency

- an entity within the Queensland Public Sector (QPS) responsible for its own data management practices

- a statutory body or authority established by Queensland legislation.

Entering responses to the assessment should take between one and two hours on average. The assessment must be approved by the Executive Director, and this process should be completed within a four-week timeframe. This approval assures the Queensland Government Data and Digital Government that the tool has been accurately completed in alignment with governance obligations and Queensland Government Enterprise Architecture (QGEA) policies. The CDO, or if unavailable, a senior executive such as the Corporate Deputy Director-General or Executive Director, oversees the completion of the assessment to ensure alignment with the agency’s data strategy and governance framework. The assessment should draw on input from the individuals responsible for data management within your agency (Data Stewards, Data Managers, and Subject Matter Experts), with support from relevant departments or business units. The officer completing the assessment must allow all consulted areas to review the final responses for accuracy, so that each area is accountable for the responses that fall within its remit.

Multi-divisional assessment coordination

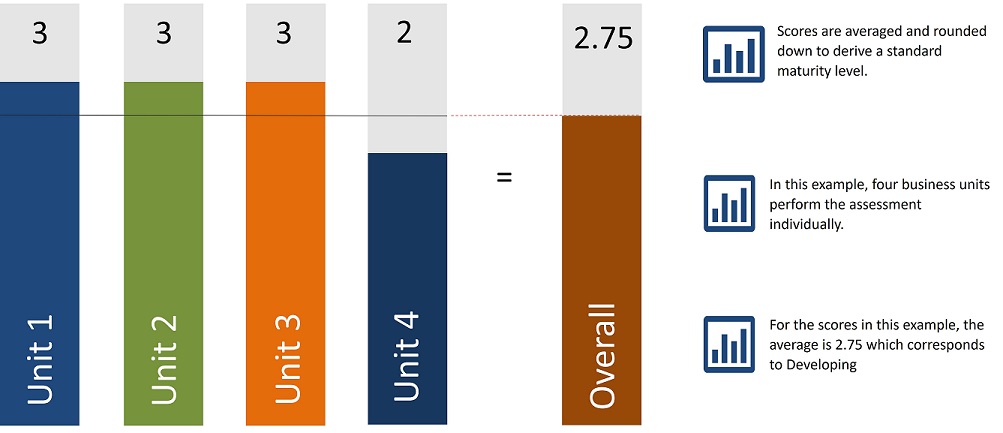

Average method

If various units within an agency individually conduct the assessment, their scores may be combined to determine an average overall maturity score for the agency.

An agency-wide maturity score can be obtained by adding the individual business unit scores and dividing the total by the number of units.

The visual depiction illustrates four separate business units within an agency each performing the assessment individually and their scores being combined to determine an average score. This method highlights both strengths and weaknesses, encouraging continuous improvement across all units.
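As a worked illustration of the average method, the sketch below averages the overall scores of four business units, mirroring the four-unit illustration above. The unit names and scores are invented, and the function name `average_method` is hypothetical; it simply divides the sum of the unit scores by the number of units.

```python
from statistics import mean


def average_method(unit_scores: dict[str, float]) -> float:
    """Agency-wide score as the mean of each business unit's overall score."""
    if not unit_scores:
        raise ValueError("at least one business unit score is required")
    for unit, score in unit_scores.items():
        # Every unit score must sit on the DMAT 0 (Unmanaged) to 5 (Optimised) scale.
        if not 0 <= score <= 5:
            raise ValueError(f"{unit}: score {score} is outside the 0-5 scale")
    return mean(list(unit_scores.values()))


# Invented scores for four business units.
unit_scores = {"Unit A": 2.5, "Unit B": 3.0, "Unit C": 1.5, "Unit D": 4.0}
print(average_method(unit_scores))  # 2.75
```

An agency-wide average of 2.75 here sits alongside a unit at 1.5, which is why reporting each unit's score next to the average keeps both strengths and weaknesses visible.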

If agencies choose to use an alternative approach for calculating the average score across multiple business units, they should clearly communicate this approach to the Data Strategy team when submitting their results. Providing a brief rationale for the chosen method will help ensure transparency and consistency in interpreting the scores across agencies.

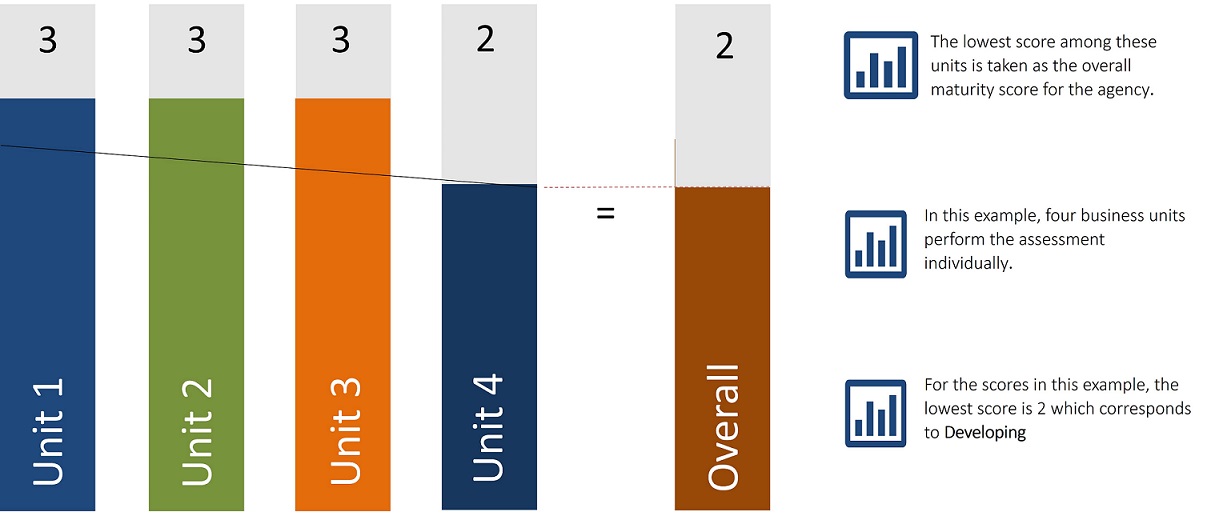

Lowest common denominator method

If agencies opt to use the lowest common denominator approach, the calculation is done as follows:

To calculate the agency-wide maturity score using the lowest common denominator approach, compare the individual assessment scores of all business units and select the lowest as the overall maturity score for the agency. This method ensures that the agency's maturity level reflects the unit with the most significant challenges, highlighting the areas that require the most attention and helping to prioritise resources and efforts to address the weakest areas first.
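With invented scores for four business units, the lowest common denominator method reduces to taking the minimum score and noting which unit produced it. The function name `lowest_common_denominator` is hypothetical, and how ties are broken is an assumption of this sketch rather than something the guideline specifies.

```python
def lowest_common_denominator(unit_scores: dict[str, float]) -> tuple[str, float]:
    """Return the business unit with the lowest score and that score.

    The lowest score becomes the agency-wide maturity score. If two units
    tie, min() keeps the first one encountered in the dictionary.
    """
    if not unit_scores:
        raise ValueError("at least one business unit score is required")
    weakest_unit = min(unit_scores, key=unit_scores.__getitem__)
    return weakest_unit, unit_scores[weakest_unit]


# Invented scores for four business units.
unit_scores = {"Unit A": 2.5, "Unit B": 3.0, "Unit C": 1.5, "Unit D": 4.0}
print(lowest_common_denominator(unit_scores))  # ('Unit C', 1.5)
```

Returning the unit name as well as the score makes it clear where improvement effort should be directed first.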

Resources to complete the assessment

Agencies are encouraged to ensure the assessment is conducted precisely, drawing on the most reliable sources and informed by their existing knowledge of data and information management practices specific to their context. Where feasible, agencies ought to maintain a log of the sources referenced during the assessment process. During the assessment process, agencies are required to cross-check and evaluate relevant policies, frameworks, and guidelines to confirm that their data management practices adhere to these established standards.

Such references could include:

- strategies, policies, and governance processes relevant to the Queensland Public Sector (e.g. Information governance policy, National Privacy Principles (NPPs), Information Privacy Principles (IPPs), Information Management Policy Framework (IMPF), Information Access and Use Policy (IS33), internal policies and risk management frameworks, etc.)

- registers (e.g. asset register, information register, reference data register etc)

- reports

- approvals and audit documentation

- system procurement

- system performance monitoring documentation

- business rules and logic

- metadata documentation, reference data documentation and other applicable sources.

Agencies are encouraged to approach the assessment accurately to ensure the results are realistic, practical, and valuable. Providing accurate responses not only helps in painting a true picture of current capabilities but also ensures that any insights gained are actionable, enabling targeted improvements and effective resource allocation.

Confidentiality of assessment information

Handling of agency data - DMAT results

All data submitted through the DMAT will be treated with strict confidentiality and used solely to inform agency-level capability uplift and whole-of-government strategic planning. Only the CDSB Data Strategy Team will have access to the responses, allowing us to analyse and consolidate findings into summaries that reflect agency-wide and Queensland Government-level data maturity.

The information collected will be used to:

- provide individual agencies with a clear understanding of their current data maturity and tailored recommendations

- inform whole-of-government trends and identify common capability gaps across the public sector

- support the planning of coordinated uplift initiatives, recommended training programs, and tailored support for agencies

- enable agencies to monitor progress and benchmark performance against sector trends, peer insights, and recognised industry standards.

The information collected will not be used to:

- publish or share individual agency responses

- attribute specific data or insights to any agency without their explicit consent

- support compliance activities, performance management, or external reporting

- compare agencies or rank their performance in any way.

Each agency completing the DMAT will receive a summary report that provides a comprehensive view of their data management practices, along with tailored recommendations, tools, and training opportunities to support capability uplift. Additionally, the Data Strategy Team will analyse the aggregated results to assess overall data maturity against best practices across the Queensland Government, helping to build a complete and strategic picture of data capability at the whole-of-government level.

Where high-level insights are shared across government, they will be presented in an aggregated and de-identified format, focusing on system-wide trends. To protect anonymity, any high-level or cohort-level reporting (e.g., by agency type or size) will only be shared when sufficient responses exist to ensure confidentiality. Identifiable data or insights will only be shared with the agency’s express consent. We are committed to ensuring that individual agency results will not be used to make comparisons between agencies or to rank performance in any form.
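The suppression rule for cohort-level reporting can be sketched as follows. The guideline does not say what counts as "sufficient responses", so the minimum cohort size of 5 (`MIN_COHORT_SIZE`) is a hypothetical placeholder, as are the cohort labels, the scores, and the function name `cohort_summary`. Cohorts below the threshold are omitted from the output rather than reported.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical threshold: the guideline only says "sufficient responses".
MIN_COHORT_SIZE = 5


def cohort_summary(responses: list[tuple[str, float]],
                   min_size: int = MIN_COHORT_SIZE) -> dict[str, float]:
    """Average scores per cohort, suppressing cohorts with too few responses.

    Each response is (cohort label, agency score). Agency names are never
    passed in, so the output can only describe cohorts, not individual agencies.
    """
    by_cohort: dict[str, list[float]] = defaultdict(list)
    for cohort, score in responses:
        by_cohort[cohort].append(score)
    return {
        cohort: round(mean(scores), 2)
        for cohort, scores in sorted(by_cohort.items())
        if len(scores) >= min_size
    }


# Invented responses: six "large" agencies but only two "small" ones.
responses = [("large", s) for s in (3.0, 2.5, 3.5, 4.0, 2.0, 3.0)]
responses += [("small", s) for s in (1.5, 2.5)]
print(cohort_summary(responses))  # {'large': 3.0}
```

The "small" cohort is withheld because two responses could make individual agencies identifiable; lowering `min_size` would release it, which is why the threshold is a policy decision rather than a technical one.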

This approach ensures agencies can engage in the DMAT process with confidence, knowing that their data is protected, used responsibly, and supports the collective goal of strengthening data capability across the public sector. Over time, agencies that feel confident and wish to share their experiences, insights, or results more openly will be supported to do so on a voluntary basis, fostering a culture of collaboration and shared learning across the sector.

Assessment outcomes and improvement strategies

The Queensland Government’s DMAT is designed to give agencies a clear view of their current level of data maturity and data capabilities, and to uncover growth opportunities. The DMAT results help agencies to understand where they stand in their data journey, identify critical gaps, and create a pathway for developing robust data practices. This tool is intended to help agencies form targeted strategies, support data-driven decisions, and uphold integrity and accountability in data management practices.

Decoding DMAT results

The DMAT provides each agency with a detailed report, summarising performance across various focus areas, alongside insights into specific strengths and potential improvement areas. Every agency has its own unique goals, risks, and operational environments, which means there isn’t a “standard” maturity level that applies universally. Instead, agencies are encouraged to use their results as a guide to determine the most suitable maturity level that aligns with their goals and data responsibilities.

Examples of contextual needs:

- Scenario A: An agency involved in managing personal information may find that it requires more stringent data governance controls to meet privacy and security expectations. In this case, a higher score in data protection practices would be critical.

- Scenario B: A smaller agency with limited data-sharing responsibilities may determine that moderate maturity levels in certain areas are enough to meet their operational needs, avoiding the resource demands of aiming for maximum scores across all domains.

- Scenario C: An agency that regularly conducts complex data analysis may need high data quality and accessibility standards. A moderate score in data governance might limit their ability to derive timely insights, so they may prioritise improving data integration and quality controls to support analytical precision.

- Scenario D: For an agency providing direct services to citizens, mature data governance and security protocols are crucial to protect personal information and maintain public trust. Lower maturity in security controls increases the likelihood of data privacy risks, which could ultimately impact citizen trust if vulnerabilities are not addressed.
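One way to act on these contextual needs is a simple gap analysis: the agency records the target level it considers appropriate for each theme and compares it with its current score. The sketch below is illustrative and not a DMAT feature; the function name `prioritise_gaps` and all scores are hypothetical, loosely modelled on Scenario A, where risk management carries a higher target.

```python
def prioritise_gaps(current: dict[str, int], target: dict[str, int]) -> list[tuple[str, int]]:
    """List themes whose current score falls short of the agency's own target.

    Results are ordered from the largest gap to the smallest; themes already
    at or above target are left out. Every target theme must have a score.
    """
    gaps = [(theme, target[theme] - current[theme]) for theme in target]
    shortfalls = [(theme, gap) for theme, gap in gaps if gap > 0]
    # sorted() is stable even with reverse=True, so equal gaps keep target order.
    return sorted(shortfalls, key=lambda item: item[1], reverse=True)


# Hypothetical current scores and agency-chosen targets (0-5 scale).
current = {"Strategy and governance": 3, "Data operations": 2,
           "Data architecture": 2, "Risk management": 2}
target = {"Strategy and governance": 3, "Data operations": 3,
          "Data architecture": 2, "Risk management": 4}
print(prioritise_gaps(current, target))
# [('Risk management', 2), ('Data operations', 1)]
```

Because targets are set by the agency, two agencies with identical scores can end up with different priority lists, which is consistent with there being no universal "standard" maturity level.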

Turning insights into actions

Agencies can take several steps based on their DMAT results to drive practical improvements:

- Developing focused strategies: Agencies can use their outcomes to create action plans for bridging any identified gaps, ensuring that their data practices align with organisational objectives and operational requirements.

- Supporting a culture of data responsibility: By sharing the assessment insights with leadership, agencies can encourage stronger governance, align on clear data policies, and inspire a commitment to data responsibility across teams.

- Building capacity and addressing skill gaps: Identifying areas where capability can be strengthened helps agencies to invest in training, development programs, or new technologies that advance their data practices.

Creating a network for shared learning

A collaborative approach to data maturity can benefit all Queensland Government agencies. By establishing a shared community of practice, agencies can exchange knowledge, compare progress, and collectively work toward improving data management practices. This network enables agencies to share solutions, discuss best practices, and align on common goals, ultimately creating a stronger and more consistent data culture across the government.

The DMAT supports agencies to:

- strengthen data and analytical capabilities

- drive innovation through enhanced data insights

- foster a culture of data responsibility and stewardship

- improve data governance and accountability

- promote collaborative data practices across agencies

- enable continuous improvement in data management

- align with government-wide data strategy objectives

- inform policy development with data-driven insights

- establish a clear roadmap for advancing data maturity.

Improvement strategies to elevate agency maturity

Achieving higher levels of data maturity requires a balanced focus on a variety of elements, including data strategy alignment, governance practices, technology infrastructure, and effective data operations. Agencies can drive meaningful progress by taking a strategic, flexible approach that aligns with their unique goals, risk profiles, and operating environments.

An agency can make significant progress in its data maturity by:

- Progressing through maturity stages: Agencies can build foundational capabilities and move systematically through each maturity level, ensuring that they acquire the essential skills, technology, and processes needed at each stage.

- Focusing on targeted improvements: Agencies may choose to enhance specific capabilities or focus areas that align closely with their objectives or address immediate needs. This flexible approach allows agencies to make measurable progress without waiting to advance on all fronts simultaneously.

Targeted strategies for data maturity improvement

Once agencies recognise areas needing improvement, they can use DMAT assessment results to launch focused enhancement initiatives. Potential strategies include:

- Strengthening data policies and procedures: Use assessment insights to adjust policies and practices to better support data quality, security, and accessibility. This can include revising data handling procedures, establishing clearer data-sharing protocols, or updating data governance frameworks based on the guidelines.

- Developing a continuous improvement plan: Agencies can establish a roadmap for continuous data management improvement, focusing on regular reviews of data processes, practices, and technologies to ensure they keep pace with emerging needs and standards.

- Engaging in peer learning and collaboration: By joining cross-agency working groups or communities of practice, agencies can learn from one another’s experiences, share successful data management strategies, and collaborate on solutions to common challenges.

- Implementing data quality assurance programs: Regularly assess data accuracy, completeness, and timeliness to improve data integrity. This can involve periodic data audits, automated quality checks, or training staff on best practices in data entry and management.

- Leveraging modern data tools and technologies: Explore and implement advanced technologies, such as data cataloguing, data lineage, and analytics platforms, to enhance data accessibility, traceability, and usability. Investing in these tools can also streamline data workflows and improve reporting capabilities.

- Prioritising security and compliance: Agencies should develop or refine security protocols, data encryption methods, and access controls to protect sensitive information. Staying informed on regulatory changes and regularly updating compliance measures ensures data remains protected and risks are minimised.

- Building data literacy across teams: Introduce data literacy programs to help staff understand the value of data, interpret data correctly, and apply insights responsibly. Data literacy initiatives can foster a data-driven culture and enhance decision-making at all levels.
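The automated quality checks mentioned under data quality assurance programs could start as simply as the sketch below, which measures two of the dimensions named there: completeness and timeliness. Accuracy usually needs a trusted reference source and is not shown. The field names, the 90-day freshness window, the records, and the function names are all hypothetical and would be set by each agency.

```python
from datetime import date


def completeness(records: list[dict], required_fields: list[str]) -> float:
    """Share of records in which every required field is present and non-empty."""
    if not records:
        raise ValueError("no records to check")
    complete = sum(
        1 for record in records
        if all(record.get(field) not in (None, "") for field in required_fields)
    )
    return complete / len(records)


def timeliness(records: list[dict], as_at: date, max_age_days: int,
               date_field: str = "last_updated") -> float:
    """Share of records updated within max_age_days of the as_at date.

    Records with no update date are treated as out of date.
    """
    if not records:
        raise ValueError("no records to check")
    fresh = sum(
        1 for record in records
        if record.get(date_field) is not None
        and (as_at - record[date_field]).days <= max_age_days
    )
    return fresh / len(records)


# Invented client records; "postcode" is blank in one of them.
records = [
    {"client_id": "C1", "postcode": "4000", "last_updated": date(2024, 6, 1)},
    {"client_id": "C2", "postcode": "", "last_updated": date(2024, 6, 20)},
    {"client_id": "C3", "postcode": "4101", "last_updated": date(2023, 12, 1)},
    {"client_id": "C4", "postcode": "4870", "last_updated": date(2024, 1, 10)},
]
print(completeness(records, ["client_id", "postcode"]))  # 0.75
print(timeliness(records, as_at=date(2024, 6, 30), max_age_days=90))  # 0.5
```

Running checks like these on a schedule and tracking the ratios over time gives the kind of metric-based monitoring described at the Managed level in Appendix A.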

Further steps to support data maturity

To support ongoing improvement, agencies are encouraged to consider:

- Annual data maturity review: Conduct an annual review of data maturity progress, adjusting improvement plans based on the latest needs, goals, and performance data.

- Seeking external expertise: When needed, agencies can engage external advisors or auditors to provide objective insights, validate progress, and offer guidance on further improvement steps.

- Staying updated on data governance and technology trends: Keep informed about emerging data governance best practices, new technologies, and legislative changes that impact data management.

By taking these steps, Queensland Government agencies can build robust data capabilities that enhance decision-making, reduce risks, and align with the broader goals of public service excellence and accountability. Agencies seeking additional guidance or resources can connect with the Data Strategy Team for further support on their data maturity journey.

Appendices

Appendix A

The maturity levels below are general descriptions; their specific meaning depends on the question or area of focus.

Level 5: Optimised

Continuous improvement and innovation in data management practices

- The agency excels in maximising data usage and management.

- Continuous improvement and innovation in data management practices, with regular review and updating of documentation.

- Data is integral to all aspects of operations, supporting real-time decision-making, innovation, and adaptability.

- Best practices are consistently refined and applied to ensure alignment with the organisation’s standards and benchmarks.

Level 4: Managed

Implemented in all areas, managed based on measures

- Data management practices are standardised and consistently applied throughout the agency based on measures.

- Metrics and benchmarks are used to regularly monitor and optimise data performance.

- Data management is proactive and integrated into daily operations.

- Data-driven insights inform strategic decisions and drive operational efficiencies across all functions.

Level 3: Defined

Established at the group level, being implemented in some areas

- Formal processes are established to manage data across the agency, including at the group, division, or unit level.

- A clear strategy for effective data use and management is in place, and clear roles and responsibilities for data management are defined.

- Data is integrated into the agency's vision and strategic initiatives, improving decision-making and operational effectiveness.

- Policies and procedures are implemented in some areas with increasing consistency.

Level 2: Developing

Building a whole-of-group process with documented policies and procedures

- The agency recognises the value of effective data usage.

- Data management efforts are more coordinated and involve multiple departments.

- Progress is noticeable in certain areas, leading to enhanced operational efficiencies within those domains.

- Initial steps towards standardisation and repeatability.

Level 1: Initial

Happening in some business lines or occurring in pockets

- There are occasional efforts to improve data use, but these are not yet coordinated across the agency.

- Data management practices occur in isolated pockets to achieve specific goals, but a unified strategy for enterprise-wide data management is lacking.

- Some documentation exists but is not comprehensive.

Level 0: Unmanaged

No documented policy or process in place

- Data management practices are informal, inconsistent, and reactive.

- Decisions are made on an ad hoc basis without a structured approach.

- Data is primarily used to retrospectively justify actions rather than strategically plan for future improvements.

| Term | Definition |
|---|---|
| Data | The representation of facts, concepts or instructions in a formalised (consistent and agreed) manner suitable for communication, interpretation or processing by human or automatic means. Data typically consists of numbers, words or images. The format and presentation of data may vary with the context in which it is used. Data is not information until it is used in a particular context for a particular purpose. Examples: coordinates of a particular survey point; a driver licence number; the population of Queensland; the official picture of a minister in JPEG format. Note: The Data Availability and Transparency Act 2022 (Cth) (DAT Act) defines data as any information in a form capable of being communicated, analysed or processed (whether by an individual or by computer or other automated means). |
| Personal information | Personal information is information or an opinion, including information or an opinion forming part of a database, whether true or not, and whether recorded in a material form or not, about an individual whose identity is apparent, or can reasonably be ascertained, from the information or opinion. (Information Privacy Act 2009, s 12) |
| Data management | Data management is the process of collecting, organising, storing, and maintaining data to ensure it is accurate, secure, and accessible for analysis and decision-making. It encompasses a range of practices, tools, and policies designed to manage data throughout its lifecycle, enabling organisations to treat data as an asset and use it effectively to support their goals. |
| Data lifecycle | The data lifecycle refers to the stages that data goes through, from its creation or collection to its eventual disposal or archiving. It typically includes phases such as data generation, storage, use, sharing, analysis, maintenance, and final deletion or preservation. |
| Data strategy | A data strategy is a long-term plan that outlines an organisation’s vision for collecting, storing, sharing, and using its data. It defines the technology, processes, people, and rules required to manage an organisation’s information assets. For example: an agency may develop a data strategy to improve service delivery by using customer data to better understand public needs. This strategy might include setting up data collection practices, investing in data analytics tools (such as Power BI or Tableau for visualising data insights), and setting goals to improve response times based on data findings. |
| Data governance function (DGF) | The data governance function (DGF) promotes the availability, quality, and security of an organisation’s data through policies and standards. It defines who within an organisation has authority and control over data assets and how those data assets may be used. |
| Data governance framework | A data governance framework is a structured approach that outlines the policies, processes, and responsibilities necessary for managing an organisation’s data. It ensures that data is accurate, accessible, consistent, and secure throughout its lifecycle. For example: assigning data stewards to manage data quality and access, ensuring sensitive data is protected and up to date; implementing a policy framework for data privacy and compliance to meet legal standards and build public trust. |
| Data architecture | Data architecture is a systematic structure outlining an organisation's processes for storing, managing, using and re-using data. By setting out how data is collected, stored, processed and accessed, an organisation's data architecture ensures that information is organised, safe, and available for better decision-making and business operations. For example: using a data warehouse (such as Snowflake) to centralise and organise data from different departments or units for unified access; implementing extract, transform, load (ETL) processes to integrate data from various systems so that it is ready for analysis and decision-making. |
| Data operations | Data operations focus on the practices and processes involved in managing and optimising the flow of data within an organisation. They aim to enable organisations to deliver data and insights at greater scale and with greater reliability, fostering a culture of continuous improvement and innovation. For example: determining the agency's ability to process data in real time through streaming technologies (e.g., Apache Kafka, Apache Flink); evaluating cybersecurity practices, including role-based access control, regular security audits, and incident response protocols; determining whether version control systems (e.g., Git) are used to track and manage changes in data models; and monitoring data pipelines so that any issues with data flow are quickly resolved, preventing disruptions in data availability. |
| Data risk management | Data risk management refers to the processes and strategies organisations implement to identify, assess, mitigate, and monitor risks associated with their data assets. It involves proactive measures to safeguard data integrity, confidentiality, and availability, and to maintain compliance with relevant regulations. For example: conducting regular security audits to identify potential vulnerabilities and strengthen data protection measures; implementing data access controls to restrict sensitive information to authorised users only, reducing the risk of data breaches. |
| Data quality | Data quality refers to the reliability, accuracy, consistency, relevance, and completeness of data. It is a measure of how well data meets the requirements or expectations of its intended use. High-quality data is crucial for making informed decisions, conducting accurate analysis, and ensuring the reliability of business processes. For example: running regular data validation checks to catch and correct errors, ensuring data remains accurate and trustworthy. |
| Reference data | Reference data refers to standardised sets of information used to categorise or classify other data elements within an organisation. It serves as a framework for interpreting and integrating different datasets across systems and applications. For example: using standardised codes for regions or departments (e.g., "QLD" for Queensland) so data is consistent across all reports and systems. |
| Metadata | Metadata refers to data that provides information about other data. It describes various aspects of data assets, such as their content, structure, context, and relationships. It plays a crucial role in managing and understanding data throughout its lifecycle, facilitating data discovery, integration, and usability. For example: creating metadata tags for datasets (e.g., “source”, “last updated”) to make it easy for users to understand and locate relevant data; documenting data lineage to show where data came from and how it has been transformed, which helps users trust and interpret data. |
| Data integration | Data integration refers to the process of combining data from different sources into a unified and coherent view. For example: ETL processes that extract data from various databases, transform it into a consistent format, and load it into a centralised data warehouse such as Snowflake or Google BigQuery. |
| Data analytics | Data analytics involves the use of techniques and tools to analyse data sets and extract meaningful insights. For example: using dashboards in Power BI to summarise historical sales data and understand past performance through descriptive analytics; using analytics to identify trends in hospital admissions, helping streamline services and reduce wait times for patients. |
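The Data quality and Reference data definitions above mention validation checks and standardised codes such as "QLD". The Python sketch below shows one minimal way such a check could work. It is illustrative only: the record layout, the field names `client_id` and `state_code`, and the `validate` function are hypothetical, and the DMAT does not prescribe any particular tooling.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

# Hypothetical reference data: standardised state and territory codes
# (e.g. "QLD" for Queensland) that every record must use.
STATE_CODES = {"ACT", "NSW", "NT", "QLD", "SA", "TAS", "VIC", "WA"}


@dataclass
class Record:
    # Hypothetical record layout, used only for illustration.
    client_id: str
    state_code: Optional[str]


def validate(records: List[Record]) -> List[str]:
    """Return human-readable data quality issues found in the records.

    Checks completeness (missing state code), validity against the
    reference data set (unknown state code) and uniqueness (duplicate IDs).
    """
    issues: List[str] = []
    seen: Set[str] = set()
    for rec in records:
        # Uniqueness: each client_id should appear only once.
        if rec.client_id in seen:
            issues.append(f"{rec.client_id}: duplicate client_id")
        seen.add(rec.client_id)
        # Completeness, then validity against the reference code list.
        if not rec.state_code:
            issues.append(f"{rec.client_id}: missing state_code")
        elif rec.state_code not in STATE_CODES:
            issues.append(f"{rec.client_id}: unknown state_code {rec.state_code!r}")
    return issues


if __name__ == "__main__":
    sample = [
        Record("C001", "QLD"),
        Record("C002", "Qld"),  # not in the reference code list
        Record("C003", None),   # incomplete record
        Record("C001", "NSW"),  # duplicate identifier
    ]
    for issue in validate(sample):
        print(issue)
```

Running the script reports three issues, one each for the non-standard code, the missing value and the duplicate identifier. In practice, agencies would more likely run equivalent checks within their existing data platforms or data quality tooling; the sketch only shows the underlying idea of checking data against agreed reference values.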

| Focus area | Focus area overview | Who should answer the questions? |
|---|---|---|
| Data strategy and governance | This focus area assesses how well an agency’s data strategy aligns with its organisational goals. It also evaluates the presence and effectiveness of governance frameworks that ensure data is managed responsibly, securely, and in compliance with regulations. | Chief Data Officer (CDO) (If available); Data Governance Lead/Manager; Enterprise Architects (If available); Senior Executives (CIO, Head of Strategy, Digital Leadership Team) |
| Data architecture | This area examines how well an agency's data systems are designed and structured to support both operational and strategic needs. It focuses on data models, infrastructure, interoperability, and scalability. | Enterprise Data Architects/Solution Architects (If available); Technology and IT Strategy Teams; Data Engineering Leads |
| Data operations | This focus area evaluates the effectiveness of an agency’s day-to-day data management practices, including data storage, access, processing, and maintenance. | Data Operations Manager/Data Services Teams; IT Infrastructure and Support Teams; System Administrators |
| Data risk management | This area examines how agencies identify, assess, and mitigate risks related to data security, regulatory compliance, and operational disruptions. | Chief Information Security Officer (CISO) (If available)/Cyber Security Teams; Risk and Compliance Teams; Legal/Regulatory Affairs Officers |
| Data quality | This focus area assesses whether data is accurate, complete, consistent, and reliable enough to support decision-making and business processes. | Data Governance Lead/Data Stewards; Business Intelligence (BI) Teams; Data Analysts/Quality Assurance Teams |
| Reference data and metadata management | This area evaluates how well agencies manage reference data (standardised values used across systems) and metadata (descriptive information about data) to improve data understanding and interoperability. | Data Governance Lead/Data Stewardship Teams; Master Data Management (MDM) Teams (If available); Data Cataloguing and Metadata Managers (If available) |
| Data integration and analytics | This focus area assesses an agency’s ability to integrate data from various sources and leverage analytics to generate meaningful insights for decision-making. | Data Engineers/Data Integration Teams; Business Intelligence (BI) and Analytics Teams; Data Scientists/Application Development Teams (If available) |

Data governance

- Information Privacy Principles (IPPs) (Note: References to the IPPs in this document will be updated to reflect the Queensland Privacy Principles (QPPs) following the commencement of the IPOLA Act 2023.)

- Information Management Policy Framework (IMPF)

- Information governance policy

- National Privacy Principles (NPPs) (Note: References to the NPPs in this document will be updated to reflect the Queensland Privacy Principles (QPPs) following the commencement of the IPOLA Act 2023.)

- Data governance guideline

- Information access and use policy

- Implementing information governance guideline

Data operations

Data risk management

- Information and cyber security policy (IS18)

- Information security classification framework

- A Guide to Risk Management

- ICT risk management

Data quality

- Information quality framework guideline (For reference only - document has been repealed)

- Data quality assessment tool (DQAT) (Disclaimer: As part of the IMPF refresh, we are exploring the adoption of ISO 8000 Data Quality standards.)

- DQAT Guide

Reference data and metadata management

- Metadata lifecycle management (For reference only - document has been repealed)

- Metadata management principles

Stages of DMAT

Stage 1: Identify and define data assets

- Information asset custodianship policy

- Information security classification framework (QGISCF)

- Metadata schema for Queensland Government data assets guideline

- Data governance guideline

- Information assets and their classification (For reference only - document has been repealed)

- QGEA Information quality framework (For reference only - document has been repealed)

Stage 2: Map data roles and responsibilities

- CDSB Data Governance Framework (Shared upon request - contact QGCDGDataStrategy@chde.qld.gov.au)

- CDSB Data Governance Policy (Shared upon request - contact QGCDGDataStrategy@chde.qld.gov.au)

- Data job role personas, Australian Public Service Commission